爱可可 AI Paper Recommendations (October 17), Part 3

5. [LG] Neograd: gradient descent with an adaptive learning rate

M. F. Zimmer

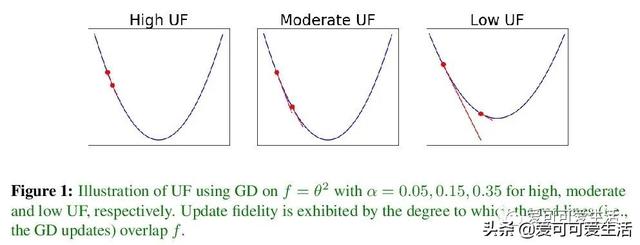

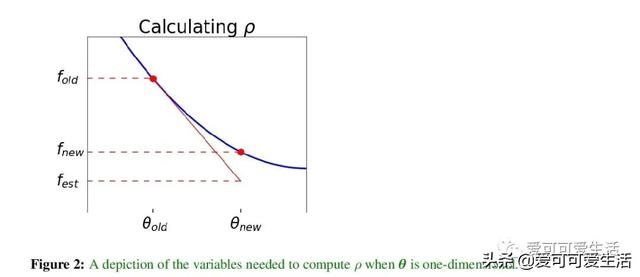

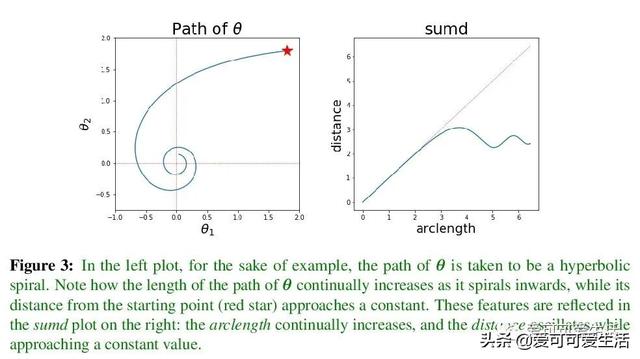

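Neograd is a family of gradient descent algorithms with an adaptive learning rate. It uses a formulaic estimate based on ρ, a metric of the error of the updates, to adjust the learning rate dynamically at every training step. This removes the need for trial runs to estimate a single learning rate for the whole optimization, and the extra cost is negligible. One member of the family, NeogradM, quickly reaches much lower cost function values than other first-order algorithms, a substantial performance improvement.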

Since its inception by Cauchy in 1847, the gradient descent algorithm has been without guidance as to how to efficiently set the learning rate. This paper identifies a concept, defines metrics, and introduces algorithms to provide such guidance. The result is a family of algorithms (Neograd) based on a constant ρ ansatz, where ρ is a metric based on the error of the updates. This allows one to adjust the learning rate at each step, using a formulaic estimate based on ρ. It is now no longer necessary to do trial runs beforehand to estimate a single learning rate for an entire optimization run. The additional costs to operate this metric are trivial. One member of this family of algorithms, NeogradM, can quickly reach much lower cost function values than other first order algorithms. Comparisons are made mainly between NeogradM and Adam on an array of test functions and on a neural network model for identifying hand-written digits. The results show great performance improvements with NeogradM.
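The constant-ρ idea can be illustrated with a minimal Python sketch. This sketch assumes that ρ is the relative error of the first-order (linear) prediction of the cost change, |Δf − g·Δx| / |g·Δx|. That definition is an illustrative choice made here, and the paper's exact ρ and the NeogradM update rule may differ. For a plain gradient step Δx = −α g, the linear prediction scales like α while its error scales roughly like α². As a result, ρ grows roughly linearly with the learning rate α, so the sketch rescales α by ρ_target/ρ after every step. The names `adaptive_rho_descent`, `rho_target`, and `max_growth` are hypothetical.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def adaptive_rho_descent(
    f: Callable[[Vector], float],
    grad: Callable[[Vector], Vector],
    x0: Vector,
    lr0: float = 1e-3,
    rho_target: float = 0.3,
    steps: int = 100,
    max_growth: float = 10.0,
) -> Tuple[Vector, List[float], List[float]]:
    """Hypothetical 'constant rho' gradient descent (illustrative, not NeogradM).

    rho is ASSUMED to be the relative error of the first-order prediction of
    the cost change; the learning rate is rescaled every step to push rho
    toward rho_target.
    """
    x = list(x0)
    lr = lr0
    costs = [f(x)]          # cost after each accepted step
    rhos: List[float] = []  # rho measured at every trial step
    for _ in range(steps):
        g = grad(x)
        g2 = sum(gi * gi for gi in g)
        if g2 == 0.0:  # exact stationary point: nothing left to do
            break
        x_new = [xi - lr * gi for xi, gi in zip(x, g)]
        f_new = f(x_new)
        predicted = -lr * g2        # linear prediction g . dx with dx = -lr * g
        actual = f_new - costs[-1]  # measured change in cost
        rho = abs(actual - predicted) / abs(predicted)
        rhos.append(rho)
        if rho < 1.0:
            # rho < 1 implies actual < 0, so an accepted step always lowers the cost
            x = x_new
            costs.append(f_new)
        # prediction ~ lr and its error ~ lr**2, so rho ~ lr: rescale lr linearly,
        # capping growth so a tiny rho cannot blow the step size up
        scale = rho_target / rho if rho > 0.0 else max_growth
        lr *= min(scale, max_growth)
    return x, costs, rhos


def quad(v: Vector) -> float:
    """Ill-conditioned quadratic f(x) = x0^2 + 100 * x1^2."""
    return v[0] ** 2 + 100.0 * v[1] ** 2


def quad_grad(v: Vector) -> Vector:
    return [2.0 * v[0], 200.0 * v[1]]


if __name__ == "__main__":
    x, costs, rhos = adaptive_rho_descent(quad, quad_grad, [1.0, 1.0], steps=200)
    print(f"start cost {costs[0]:.3g}, final cost {costs[-1]:.3g}, "
          f"accepted steps {len(costs) - 1}")
```

Under this definition, ρ equals α exactly on the one-dimensional test function f(x) = x². Each accepted step strictly lowers the cost, because ρ < 1 forces the measured change to be negative when the predicted change is. The sketch is plain gradient descent with an adaptive step size and is not an implementation of NeogradM.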
