Scraping paper information with Python and saving it to a MySQL database: paper material at hand

1. Decide which fields to scrape and design the database table.

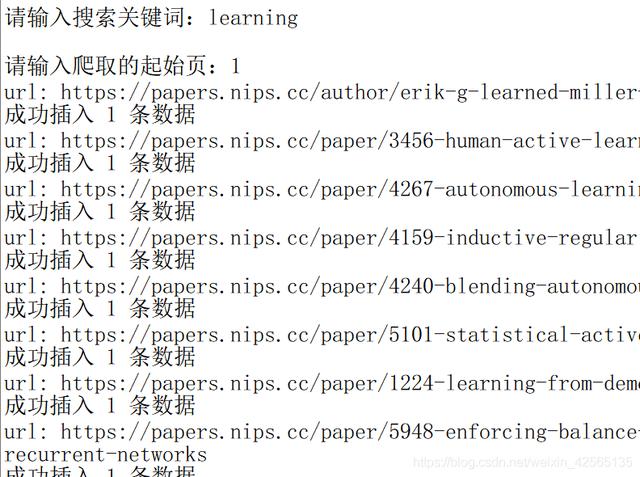
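The table design from step 1 can be sketched in code. The column names below are taken from the `INSERT` statement in `craw_db.py`; the `id` column, every type, and every length are assumptions made for illustration and may differ from the schema in the screenshot.

```python
# Column names come from the INSERT statement in craw_db.py.
# The id column, the types and the lengths are assumptions, not the author's schema.
COLUMNS = [
    ("id", "INT AUTO_INCREMENT PRIMARY KEY"),  # hypothetical surrogate key
    ("title", "VARCHAR(500)"),
    ("keywords", "VARCHAR(500)"),
    ("authors", "VARCHAR(1000)"),
    ("author_company", "VARCHAR(500)"),
    ("confer_name", "VARCHAR(100)"),
    ("abstrcts", "TEXT"),
    ("publish_date", "VARCHAR(50)"),
    ("github_code", "VARCHAR(500)"),
    ("paper_url", "VARCHAR(500)"),
    ("con_paper", "VARCHAR(500)"),
]


def build_create_table_sql(table="huiyi"):
    """Return a MySQL CREATE TABLE statement for the paper table."""
    column_lines = ",\n".join(f"    {name} {sql_type}" for name, sql_type in COLUMNS)
    return (
        f"CREATE TABLE IF NOT EXISTS {table} (\n"
        f"{column_lines}\n"
        ") DEFAULT CHARSET=utf8"
    )


create_sql = build_create_table_sql()
print(create_sql)
```

The printed statement can be pasted into a MySQL client, or passed to `cursor.execute()` on an open connection to the `craw_con` database.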

2. Run the crawl; the scraped results are printed to the console.

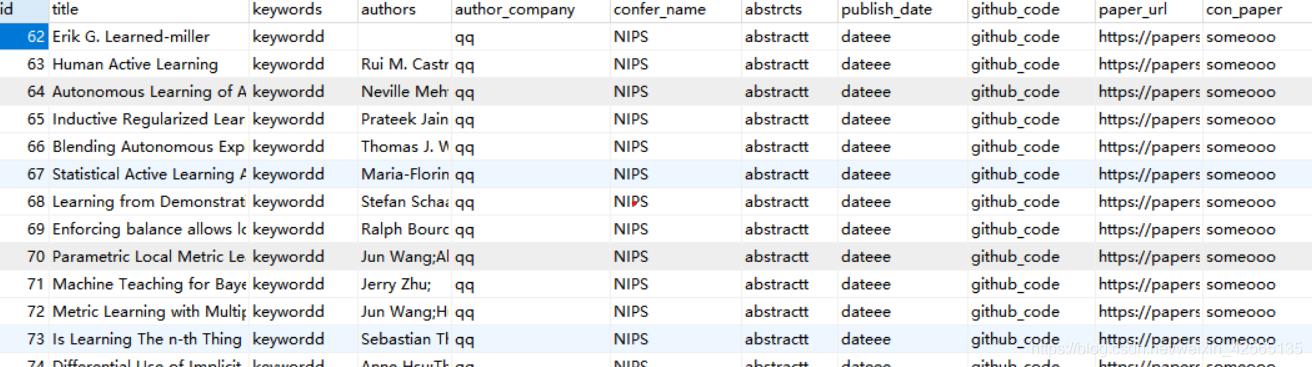
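One detail worth handling during the crawl: `get_url` in `craw_todb.py` collects raw `href` values, and `a.attrs.get('href')` returns `None` when a link has no `href`. If the listing page uses relative links (an assumption, the article does not say), they need to be resolved against the page URL before they can be fetched. A minimal sketch, with a hypothetical helper name and made-up URLs:

```python
from urllib.parse import urljoin


def absolutize(page_url, hrefs):
    """Resolve hrefs against the page they were found on, skipping empty ones."""
    return [urljoin(page_url, href) for href in hrefs if href]


# Hypothetical listing page and hrefs, used only for illustration.
listing_url = "https://example.org/conference/2019/"
found = [
    "/paper/1234-some-title",       # root-relative link
    "paper/5678-other",             # link relative to the listing page
    None,                           # an <a> tag without an href
    "https://example.org/paper/9",  # already absolute
]
paper_urls = absolutize(listing_url, found)
for url in paper_urls:
    print(url)
```

Each resulting URL could then be handed to `get_info(url)`.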

3. Save the scraped results to the database.

4. Check the database.
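For the save in step 3, titles and abstracts often contain quotes, so it is safer to hand the values to the driver than to format them into the SQL string. The sketch below only builds the statement and its parameters; `build_insert` and the sample record are hypothetical, while the field names match the `huiyi` table used in `craw_db.py`.

```python
# Field names match the huiyi table used in craw_db.py.
FIELDS = (
    "title", "keywords", "authors", "author_company", "confer_name",
    "abstrcts", "publish_date", "github_code", "paper_url", "con_paper",
)


def build_insert(record, table="huiyi"):
    """Return (sql, params) for a parameterized INSERT of one paper record."""
    placeholders = ", ".join(["%s"] * len(FIELDS))
    sql = f"INSERT INTO {table} ({', '.join(FIELDS)}) VALUES ({placeholders})"
    # Fields missing from the record are stored as empty strings.
    params = tuple(record.get(field, "") for field in FIELDS)
    return sql, params


# Sample record with a quote in the title, which would break plain string formatting.
record = {
    "title": "It's a test title",
    "authors": "A. Author;B. Author;",
    "confer_name": "NIPS",
}
sql, params = build_insert(record)
print(sql)
print(params)
# With an open pymysql connection this would be run as:
#     cursor.execute(sql, params)
#     connect.commit()
```

Because the `%s` placeholders are left unquoted and the values travel separately, the driver takes care of escaping.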

Only the title, authors, and abstract of NIPS papers are scraped here as a test; scraping all of the fields will be covered later.
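To check those three fields after a run, a small query helper is enough. This is a sketch under assumptions: `preview_rows` is a hypothetical name, and an in-memory `sqlite3` database stands in for MySQL so the snippet runs without a server. With pymysql, the open connection would be passed in instead.

```python
import sqlite3


def preview_rows(connection, limit=5):
    """Return up to `limit` (title, authors, abstrcts) rows from huiyi."""
    cursor = connection.cursor()
    # int() guards the only value interpolated into the statement.
    cursor.execute(f"SELECT title, authors, abstrcts FROM huiyi LIMIT {int(limit)}")
    rows = cursor.fetchall()
    cursor.close()
    return rows


# Stand-in database so the sketch runs without a MySQL server.
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE huiyi (title TEXT, authors TEXT, abstrcts TEXT)")
demo.execute(
    "INSERT INTO huiyi VALUES (?, ?, ?)",
    ("Sample title", "A. Author;", "abstractt"),
)
demo.commit()

rows = preview_rows(demo)
for title, authors, abstrcts in rows:
    # A row still holding the "abstractt" placeholder means no abstract was found.
    print(title, authors, abstrcts == "abstractt")
```

Rows whose abstract is still the `abstractt` placeholder from `craw_db.py` point to pages where the abstract element was not found.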

Code

craw_db.py:

    import pymysql
    import requests
    from bs4 import BeautifulSoup


    def parse_html(url):
        # Parse the page with BeautifulSoup
        response = requests.get(url)
        soup = BeautifulSoup(response.text, 'html.parser')
        # Title
        title = soup.find('h2', {'class': "subtitle"})
        title = title.text + ""
        # Authors
        author = soup.find_all('li', {'class': "author"})
        authors = ""
        for author_a in author:
            authors = authors + author_a.find('a').text + ';'
        # First author's affiliation (placeholder for now)
        author_company = "qq"
        # Keywords (placeholder for now)
        keywords = "keywordd"
        # Abstract
        abstrcts = soup.find('h3', {'class': "abstract"})
        if abstrcts:
            abstrcts = abstrcts.text.strip()
        else:
            abstrcts = "abstractt"
        # Conference name
        confer_name = "NIPS"
        # Conference date (placeholder for now)
        publish_date = "dateee"
        github_code = "github_code"
        paper_url = url
        con_paper = "someooo"
        connect = pymysql.Connect(host='localhost', port=3306, user='root',
                                  passwd='your database password',
                                  db='craw_con', charset='utf8')
        # Get a cursor
        cursor = connect.cursor()
        # Insert the data
        sql = ("INSERT INTO huiyi(title,keywords,authors,author_company,confer_name,"
               "abstrcts,publish_date,github_code,paper_url,con_paper) "
               "VALUES (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)")
        data = (title, keywords, authors, author_company, confer_name,
                abstrcts, publish_date, github_code, paper_url, con_paper)
        # Pass the values separately so the driver escapes quotes in the text
        cursor.execute(sql, data)
        connect.commit()
        print('Inserted', cursor.rowcount, 'row(s)')
        # Close the connection
        cursor.close()
        connect.close()


    def main(url):
        # Send the request, get the response, and parse it
        parse_html(url)

craw_todb.py:

    import re
    import time
    from bs4 import BeautifulSoup
    import requests
    from requests import RequestException
    import craw_db
    from lxml import etree


    def get_page(url):
        try:
            # Put a User-Agent in the headers to look like a browser
            headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'}
            response = requests.get(url, headers=headers)
            if response.status_code == 200:
                response.encoding = response.apparent_encoding
                return response.text
            return None
        except RequestException as e:
            print(e)
            return None


    def get_url(html):
        # `html` is expected to be a requests Response, since .content is read from it
        url_list = []
        soup = BeautifulSoup(html.content, 'html.parser')
        ids = soup.find('div', {'class': "main wrapper clearfix"}).find_all("li")
        for id in ids:
            a = id.find('a')
            url_list.append(a.attrs.get('href'))
        return url_list


    def get_info(url):
        craw_db.main(url)


    if __name__ == '__main__':
        headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'}
        # The keyword can be entered interactively or hard-coded
        key_word = input('Enter the search keyword: ')
        # Which page to start from and how many pages to crawl
        start_page = int(input('Enter the page to start crawling from: '))
        # base_url = '{}...  (the listing is cut off at this point in the source)

Results

[image]

You probably know what to do with the rest; that part should not need explaining!

