Deploying a single-master cluster with kubeadm (Part 7)

Then log in to the dashboard with the token that was printed.

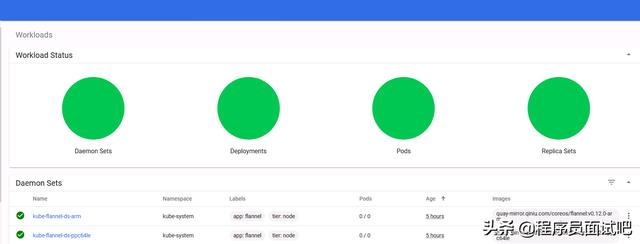


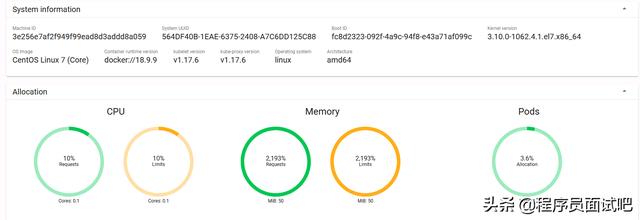


4. Summary of cluster errors

(1) Image pull fails with "not found"

    [root@master ~]# kubeadm config images pull --config kubeadm-init.yaml
    W0801 11:00:00.705044    2780 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
    failed to pull image "registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.4": output: Error response from daemon: manifest for registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.4 not found: manifest unknown: manifest unknown, error: exit status 1
    To see the stack trace of this error execute with --v=5 or higher

The Kubernetes image version being pulled is too new: the registry has no manifest for it. Pick a lower version by changing kubernetesVersion in kubeadm-init.yaml.
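The version change can be scripted. The following is a minimal sketch, assuming kubernetesVersion sits at the top level of kubeadm-init.yaml with no indentation. The helper name `set_kubernetes_version` is hypothetical, and v1.18.3 is only a placeholder; use a version the registry actually hosts.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the kubernetesVersion line of a kubeadm config file.
# A backup of the original file is kept next to it with a .bak suffix.
set_kubernetes_version() {
  file="$1"
  version="$2"
  sed -i.bak "s/^kubernetesVersion:.*/kubernetesVersion: ${version}/" "$file"
}

# Usage (v1.18.3 is a placeholder, not a recommendation):
#   set_kubernetes_version kubeadm-init.yaml v1.18.3
#   kubeadm config images list --config kubeadm-init.yaml   # show the images that will be pulled
#   kubeadm config images pull --config kubeadm-init.yaml
```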

(2) Docker cgroup driver mismatch

While installing Kubernetes, the following error comes up often:

    failed to create kubelet: misconfiguration: kubelet cgroup driver: "cgroupfs" is different from docker cgroup driver: "systemd"

The cause is that Docker's cgroup driver and the kubelet's cgroup driver do not match.
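To confirm the mismatch before changing anything, the two drivers can be compared. This is a rough sketch, assuming the kubelet was set up by kubeadm and its flags live in /var/lib/kubelet/kubeadm-flags.env; on some setups the driver is set in the kubelet config file instead, in which case the function prints nothing. The function name `kubelet_cgroup_driver` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the cgroup driver from the kubelet flags file
# that kubeadm writes (path is an assumption, overridable via the first argument).
kubelet_cgroup_driver() {
  env_file="${1:-/var/lib/kubelet/kubeadm-flags.env}"
  sed -n 's/.*--cgroup-driver=\([a-z]*\).*/\1/p' "$env_file"
}

# Compare both sides only when Docker and the kubelet flags file are present.
if command -v docker >/dev/null 2>&1 && [ -f /var/lib/kubelet/kubeadm-flags.env ]; then
  docker_driver=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null || true)
  kubelet_driver=$(kubelet_cgroup_driver)
  echo "docker: ${docker_driver}, kubelet: ${kubelet_driver}"
  [ "$docker_driver" = "$kubelet_driver" ] || echo "cgroup drivers differ"
fi
```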

1. Change Docker's cgroup driver by editing /etc/docker/daemon.json:

    {"exec-opts": ["native.cgroupdriver=systemd"]}

Then restart Docker:

    systemctl daemon-reload
    systemctl restart docker

(3) Node reports that localhost:8080 refused the connection

Running kubectl get pod on a node fails as follows:

    [root@node1 ~]# kubectl get pod
    The connection to the server localhost:8080 was refused - did you specify the right host or port?

This happens because kubectl needs the kubernetes-admin credentials to run. Without a kubeconfig that provides them, it falls back to localhost:8080.

Fix:

On the master node, copy /etc/kubernetes/admin.conf to the /etc/kubernetes directory on the node, then set the KUBECONFIG environment variable on the node.
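Both steps can be sketched as follows. The copy is shown only as a comment, assuming root SSH access and the hostname node1 that appears in the prompts of this article. The helper `add_kubeconfig_export` is hypothetical; it adds the export line only when it is missing, so repeated runs do not pile up duplicates in the profile.

```shell
#!/bin/sh
# Step 1 (on the master): copy the admin kubeconfig to the node.
# Host name and user are assumptions; adjust them to the environment.
#   scp /etc/kubernetes/admin.conf root@node1:/etc/kubernetes/

# Step 2 (on the node): append the KUBECONFIG export only if it is not there yet.
add_kubeconfig_export() {
  profile="${1:-$HOME/.bash_profile}"
  line='export KUBECONFIG=/etc/kubernetes/admin.conf'
  touch "$profile"
  grep -qxF "$line" "$profile" || echo "$line" >> "$profile"
}

# Usage on the node:
#   add_kubeconfig_export
#   . ~/.bash_profile
```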

On the node:

    [root@node1 images]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
    [root@node1 images]# source ~/.bash_profile

Run kubectl get pod on the node again:

    [root@node1 ~]# kubectl get pod
    NAME                    READY   STATUS    RESTARTS   AGE
    nginx-f89759699-z4fc2   1/1     Running   0          20m

(4) Identity validation error when a node joins the cluster

    [root@node1 ~]# kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef \
    >     --discovery-token-ca-cert-hash sha256:05b84c41152f72ca33afe39a7ef7fa359eec3d3ed654c2692b665e2c4810af3e
    W0801 11:06:05.871557    2864 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
    [preflight] Running pre-flight checks
    error execution phase preflight: couldn't validate the identity of the API Server: cluster CA found in cluster-info ConfigMap is invalid: none of the public keys "sha256:a74a8f5a2690aa46bd2cd08af22276c08a0ed9489b100c0feb0409e1f61dc6d0" are pinned
    To see the stack trace of this error execute with --v=5 or higher

The CA certificate hash was copied incorrectly. Copy the join command that the master printed after initialization once more and run it again.
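If the original join command is no longer at hand, the hash can be recomputed on the master from the cluster CA certificate. The sketch below assumes the default kubeadm certificate path /etc/kubernetes/pki/ca.crt and an RSA CA key; the function name `ca_cert_hash` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: SHA-256 of the DER-encoded public key of a CA certificate,
# the value expected after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On the master, print the value to pass to kubeadm join.
if [ -f /etc/kubernetes/pki/ca.crt ]; then
  echo "sha256:$(ca_cert_hash)"
fi

# Alternatively, let kubeadm print a complete, fresh join command:
#   kubeadm token create --print-join-command
```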

(5) Swap was not disabled when initializing the master node
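kubeadm's preflight checks expect swap to be off before kubeadm init runs. A minimal sketch of the usual remedy follows, assuming swap is configured through a standard /etc/fstab entry; the helper name `comment_out_swap` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: comment out active swap entries in an fstab-style file
# so swap stays off after a reboot. A .bak copy of the file is kept.
comment_out_swap() {
  fstab="${1:-/etc/fstab}"
  sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage as root on the master:
#   swapoff -a          # turn swap off for the running system
#   comment_out_swap    # keep it off across reboots
```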
